A New Lens on Psychotherapy in the Age of AI

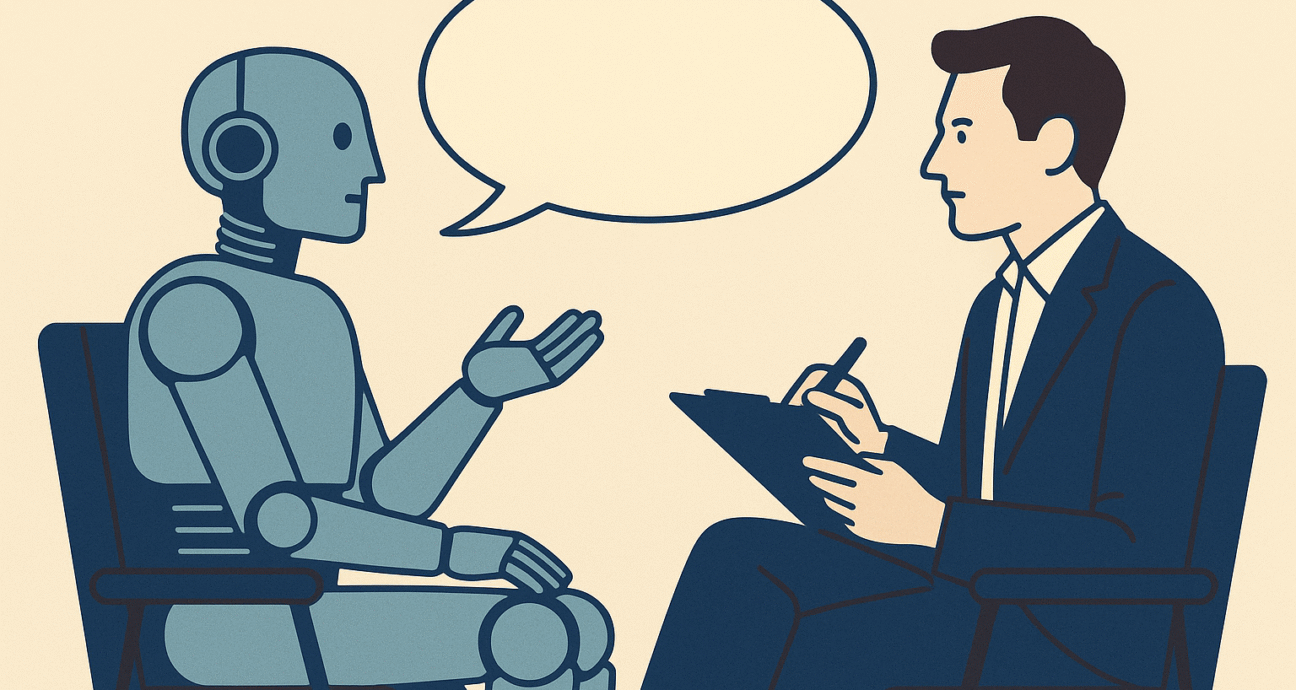

As artificial intelligence continues to evolve, the distinction between traditional human psychotherapy and chatbot-based interventions, often marketed as "digital therapy," has started to blur. Startups and tech companies around the world are building conversational AI tools that simulate the functions of a therapist, raising legitimate concerns among mental health professionals.

While most practitioners agree that AI tools aren’t genuine therapists, a deeper issue is at stake: the current definitions of psychotherapy, both public and professional, are too vague and too easily mimicked. They emphasize techniques, dialogue, and goals, and AI models can now reproduce all of these convincingly.

This reveals a critical gap. If we agree that AI lacks something essential (emotional depth, human presence, or lived experience), then we must articulate what that “something” is. Our definitions must evolve to reflect the irreplaceable qualities of human therapeutic relationships.

Traditional definitions from authorities such as the APA or NIMH describe psychotherapy as a structured, communicative process aimed at treating psychological issues. Some even name the therapeutic relationship as central. However, as studies emerge showing that clients can form emotional bonds with chatbots, even the therapeutic relationship can no longer be claimed as exclusive to human therapists.

So the key question becomes: what can’t AI do, and why does that matter for therapy? Exploring this may clarify both what authentic therapy is and what standards must be met before any intervention, human or not, is labeled “psychotherapy.” By redefining the core of our field, we protect both its integrity and its future.