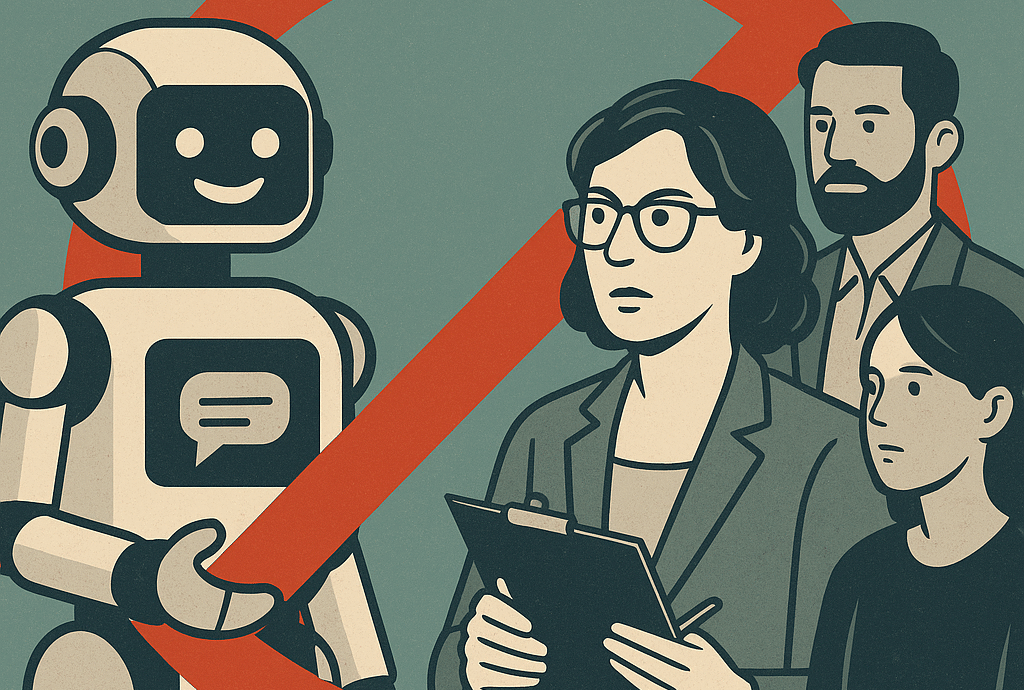

AI Chatbots and Mental Health: New State Restrictions in 2025

In 2025, three U.S. states (Utah, Nevada, and Illinois) introduced major legislation to restrict the role of AI chatbots in mental health care. These laws bar or tightly limit the use of AI for therapy, diagnoses, or treatment decisions, though AI is still permitted for administrative purposes such as scheduling and documentation. Utah’s law took effect on May 7, Nevada’s followed on July 1, and Illinois implemented its measures on August 4. Penalties differ by state; for instance, Illinois can impose fines of up to $10,000 per violation.

Lawmakers cited patient safety and the need to clearly separate administrative tools from clinical practice. Nevada’s law prohibits any AI system from delivering professional mental or behavioral health services, while Utah requires disclosures, privacy safeguards, and restrictions to prevent chatbots from impersonating licensed clinicians. These moves reflect increasing unease over unregulated tools being used by vulnerable individuals, particularly in times of crisis.

Student Perspectives and Research

At Florida International University (FIU), researchers Dr. Jessica Kizorek and Dr. Otis Kopp studied how students view AI. Many worry that AI may replace jobs and reduce opportunities for graduates. Others express concerns about over-reliance, saying that excessive use makes people “stop thinking” and erodes critical problem-solving skills. Students also question how to balance human judgment with machine assistance in education and daily life. These concerns parallel debates in mental health, where AI lacks the empathy and accountability of a trained therapist but can still support activities like journaling, mindfulness, and creativity.

Impact on Universities

The restrictions also affect academic training. Psychology and counseling students in Illinois and Nevada will have no access to chatbot-based therapy in official settings, while Utah allows limited and regulated exploration of AI tools. As a result, training for future professionals is likely to focus more on ethics, compliance, and oversight than on direct therapeutic use of AI.

Campus counseling centers nationwide continue to rely primarily on human-delivered services, though AI may still assist with intake forms or triage. Liability issues remain unresolved—if students use AI tools independently, responsibility often falls on developers unless a professional explicitly endorses the chatbot.

National Outlook

Other states, such as New Jersey, Massachusetts, and California, are considering similar regulations. At the federal level, agencies such as the FDA may eventually create nationwide standards, though debate continues. One proposed bill would even prevent states from passing new AI laws for the next decade, creating tension between state and federal approaches.

Conclusion

These laws are not outright rejections of technology but an effort to define AI’s role. For now, AI is limited to administrative tasks, research, and personal reflection, while clinical care remains the domain of licensed professionals. For students entering psychology and counseling fields, the state in which they study will shape not only their exposure to AI tools but also how they learn to balance innovation, regulation, and human-centered care.